In this sample document, elements are shown in yellow, attributes in red and node values in green. breakfast is the root node. food is a child of the breakfast element, and name is a child of the food element. price is the next sibling of name (they share the same parent), and name is the previous sibling of price. $6.95 is the node value of price.
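To make these relationships concrete, here is a small sketch using Python's standard xml.etree.ElementTree module. The XML string is a hypothetical, minimal version of the breakfast document (the dish name "Belgian Waffles" is a placeholder); only the structure and the $6.95 price come from the description above.

```python
import xml.etree.ElementTree as ET

# Hypothetical, minimal version of the breakfast document described above.
XML_DOC = """
<breakfast>
  <food>
    <name>Belgian Waffles</name>
    <price>$6.95</price>
  </food>
</breakfast>
""".strip()

root = ET.fromstring(XML_DOC)   # the root node: <breakfast>

food = root.find("food")        # child of the root element
name = food.find("name")        # child of <food>
price = food.find("price")      # also a child of <food>

# ElementTree has no sibling pointers, so siblings are located by position
# in the shared parent's list of children.
children = list(food)
next_sibling_of_name = children[children.index(name) + 1]
previous_sibling_of_price = children[children.index(price) - 1]

print(root.tag)                        # breakfast
print(next_sibling_of_name.tag)        # price
print(previous_sibling_of_price.tag)   # name
print(price.text)                      # $6.95
```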

This video covers pulling HTML elements from the DOM programmatically using PHP. First, we load the HTML using file_get_contents(). Next, we use preg_match_all() with a regular expression to turn the data on the page into a PHP array. This example demonstrates scraping a website's blog page to extract the most recent blog posts.
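The same regex-based idea can be sketched in Python, where re.findall() plays roughly the role of preg_match_all(). The blog markup and the post-title class below are hypothetical; a real page would first be downloaded, and any change to its HTML would break the pattern.

```python
import re

# Hypothetical blog-page markup; in practice this string would come from
# downloading the page (file_get_contents() plays that role in PHP).
html = """
<article><h2 class="post-title"><a href="/blog/first">First post</a></h2></article>
<article><h2 class="post-title"><a href="/blog/second">Second post</a></h2></article>
"""

# One capture group per field we want: the link URL and the post title.
pattern = re.compile(r'<h2 class="post-title"><a href="([^"]+)">([^<]+)</a></h2>')

# findall() returns one (url, title) tuple per match, which we turn into
# a list of dicts, similar to the array built from preg_match_all().
posts = [{"url": url, "title": title} for url, title in pattern.findall(html)]

for post in posts:
    print(post["title"], "->", post["url"])
```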


BeautifulSoup is a class in Python's bs4 module. Its basic purpose is to parse HTML or XML documents.

Installing bs4 (BeautifulSoup)

BeautifulSoup is easy to install with pip. Just run pip install beautifulsoup4 in your command shell.


When you run this command, pip downloads the package and prints its installation progress in your terminal.

To verify that BeautifulSoup was installed successfully, run python -c "from bs4 import BeautifulSoup" in the same terminal.

If the command finishes without an error, the installation was successful. Great!

Example 1

Find all the links in an HTML document

Assume we have an HTML document and want to collect all the reference links in it. First, we store the document as a string named html_doc.

Next, we create a soup object by passing html_doc to the BeautifulSoup constructor.

Now that we have the soup object, we can call methods of the BeautifulSoup class on it, for example to find tags and read their attributes and attribute values.
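A minimal sketch of reading a tag's attributes with bs4, assuming a hypothetical html_doc (the tutorial's own string is not shown). tag.attrs returns every attribute as a dict, and tag.get() returns a single value or None. With the default html.parser, class is a multi-valued attribute, so it comes back as a list.

```python
from bs4 import BeautifulSoup

# Hypothetical sample document standing in for the tutorial's html_doc.
html_doc = """
<html><body>
  <a href="https://example.com/one" class="link" id="first">One</a>
  <a href="https://example.com/two" class="link">Two</a>
</body></html>
"""

soup = BeautifulSoup(html_doc, "html.parser")

first_link = soup.find("a")        # first <a> tag in the document
print(first_link.attrs)            # all attributes of the tag, as a dict
print(first_link.get("id"))        # one attribute value

second_link = soup.find_all("a")[1]
print(second_link.get("id"))       # get() returns None for a missing attribute
```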

To get all the links in html_doc, we loop over every <a> tag in the document and read its href attribute.

Below is our complete code to get all the links from the html_doc string.
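A self-contained sketch of that loop, assuming a hypothetical html_doc. It uses find_all("a") and skips <a> tags that have no href, since tag.get("href") returns None for them.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the tutorial's html_doc string.
html_doc = """
<html><head><title>Links</title></head>
<body>
  <p>Read the <a href="https://docs.python.org/3/">Python docs</a>.</p>
  <p>Or visit <a href="https://www.crummy.com/software/BeautifulSoup/">Beautiful Soup</a>.</p>
  <p><a name="anchor-without-href">No link here</a></p>
</body></html>
"""

soup = BeautifulSoup(html_doc, "html.parser")

links = []
for tag in soup.find_all("a"):     # every <a> tag in the document
    href = tag.get("href")         # None when the tag has no href
    if href is not None:
        links.append(href)
        print(href)
```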

Result

Example 2

Print all the links on a website that contain a specific keyword (for example, “python”).

The program below prints every URL on a given website whose link contains “python”.
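A minimal sketch of the filtering step. To keep it runnable offline it parses a hypothetical HTML string instead of downloading a website; a real run would fetch the page first (for example with urllib.request.urlopen). Passing a compiled regular expression as the href filter makes find_all() keep only links whose href contains the keyword.

```python
import re
from bs4 import BeautifulSoup

# Hypothetical page content standing in for a downloaded website.
page_html = """
<ul>
  <li><a href="https://www.python.org/">Home</a></li>
  <li><a href="https://example.com/blog/python-tips">Tips</a></li>
  <li><a href="https://example.com/blog/rust-tips">Rust</a></li>
  <li><a>No href at all</a></li>
</ul>
"""

soup = BeautifulSoup(page_html, "html.parser")

# A regex used as an attribute filter is matched with search(), so any href
# containing "python" qualifies; tags without an href never match.
python_links = [a["href"] for a in soup.find_all("a", href=re.compile("python"))]

for url in python_links:
    print(url)
```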

Result

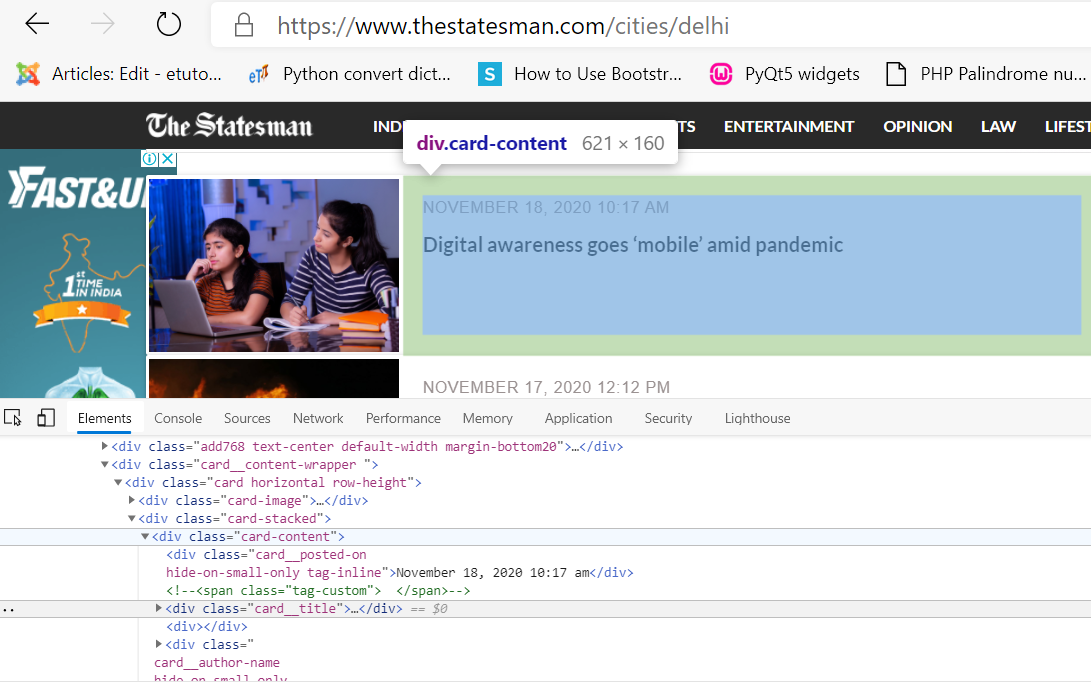

Web scraping relies on the HTML structure of a page, so it can never be completely stable: when the HTML structure changes, the scraper may break. Keep this in mind when reading this article; by the time you read it, the CSS selectors used here may be outdated.

Almost every PHP developer has scraped some data from the Web at some point. Often we need data that is available only on a particular website, and we want to pull it and save it somewhere. Done by hand, that means opening a browser, walking through the links and copying the data we need. But the same thing can be automated with a script. In this tutorial, I will show you how to speed up your scraper by making requests asynchronously.

The Task

We are going to create a simple web scraper that parses movie information from an IMDB movie page.

Here is an example of the Venom movie page. We are going to request this page to get:

- title

- description

- release date

- genres


IMDB doesn’t provide any public API, so if we need this kind of information we have to scrape it from the site.

Why should we use ReactPHP and make requests asynchronously? The short answer is speed. Let’s say that we want to scrape all movies from the Coming Soon page: 12 pages, one for each month of the upcoming year. Each page has approximately 20 movies, so in total we are going to make about 12 × 20 = 240 requests. Making these requests one after another can take some time…

Now imagine that we can run these requests concurrently. That way, the scraper becomes significantly faster. Let’s try it.
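The speed-up can be illustrated with a small timing experiment. This sketch uses Python's asyncio rather than ReactPHP, and the "requests" are simulated with asyncio.sleep() (no network). Run one after another, the total time is roughly the sum of the delays; run concurrently, it is roughly the longest single delay. The same reasoning applies to the 240 requests above.

```python
import asyncio
import time

# Simulated request: sleeps instead of doing real network I/O.
async def fake_request(delay: float) -> float:
    await asyncio.sleep(delay)
    return delay

async def sequential(delays):
    # Each request starts only after the previous one has finished.
    return [await fake_request(d) for d in delays]

async def concurrent(delays):
    # All requests are started at once; gather() waits for all of them.
    return await asyncio.gather(*(fake_request(d) for d in delays))

def timed(coro_fn, delays) -> float:
    start = time.perf_counter()
    asyncio.run(coro_fn(delays))
    return time.perf_counter() - start

delays = [0.1, 0.2, 0.3]
seq_time = timed(sequential, delays)    # about 0.1 + 0.2 + 0.3 = 0.6 s
conc_time = timed(concurrent, delays)   # about max(delays) = 0.3 s
print(f"sequential: {seq_time:.2f}s, concurrent: {conc_time:.2f}s")
```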

Set Up

Before we start writing the scraper, we need to download the required dependencies via Composer.

We are going to use an asynchronous HTTP client called clue/buzz-react, a library written by Christian Lück. It is a simple PSR-7 HTTP client for the ReactPHP ecosystem.

For traversing the DOM, I’m going to use the Symfony DomCrawler component.

To use jQuery-like CSS selectors with DomCrawler, we also need the Symfony CssSelector component.

Now, we can start coding. This is our start:

We create an instance of the event loop and the HTTP client. The next step is making requests.

Making Requests

The public interface of the client’s main Clue\React\Buzz\Browser class is very straightforward. It has a set of methods named after HTTP verbs: get(), post(), put() and so on. Each method returns a promise. To request a page, we can use the get($url, $headers = []) method:

The code above simply outputs the requested page on the screen. When a response is received, the promise fulfills with an instance of Psr\Http\Message\ResponseInterface. So we can handle the response inside a callback and then return the processed data as the promise’s resolution value.

Unlike the ReactPHP HttpClient, clue/buzz-react buffers the response and fulfills the promise only once the whole response has been received. This is the default behavior, and you can change it if you need streaming responses.

So, as you can see, the whole process of scraping is very simple:

- Make a request and receive the promise.

- Add a fulfillment handler to the promise.

- Inside the handler traverse the response and parse the required data.

- If needed, repeat from step 1.
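The four steps can be sketched in Python with asyncio, where the fulfillment handler becomes the code that runs after await. This is an analogue, not the article's ReactPHP code: fetch() is a hypothetical stand-in that reads from an in-memory dict instead of making HTTP requests, and the regex-based parse() is only for illustration.

```python
import asyncio
import re

# Hypothetical in-memory "site" standing in for real HTTP responses.
FAKE_PAGES = {
    "/movies/1": '<h1>Movie One</h1><a class="next" href="/movies/2">next</a>',
    "/movies/2": "<h1>Movie Two</h1>",
}

async def fetch(url: str) -> str:
    """Step 1: make a request (simulated with a short sleep, no network)."""
    await asyncio.sleep(0.01)
    return FAKE_PAGES[url]

def parse(html: str) -> dict:
    """Step 3: traverse the response and pull out the data we need."""
    title = re.search(r"<h1>(.*?)</h1>", html)
    next_link = re.search(r'<a class="next" href="([^"]+)"', html)
    return {
        "title": title.group(1) if title else None,
        "next": next_link.group(1) if next_link else None,
    }

async def crawl(start_url: str) -> list:
    titles = []
    url = start_url
    while url is not None:
        html = await fetch(url)     # step 1: request
        data = parse(html)          # steps 2-3: handle and parse the response
        titles.append(data["title"])
        url = data["next"]          # step 4: repeat if there is another URL
    return titles

titles = asyncio.run(crawl("/movies/1"))
print(titles)
```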

Traversing DOM

The page that we need doesn’t require any authorization. If we look at the source of the page, we can see that all the data we need is already available in the HTML. The task is very simple: no authorization, form submissions or AJAX calls. Sometimes analyzing the target site takes several times longer than writing the scraper, but not this time.

After we have received the response, we are ready to start traversing the DOM, and this is where Symfony DomCrawler comes into play. To start extracting information, we need to create an instance of the Crawler. Its constructor accepts an HTML string:

Inside the fulfillment handler, we create an instance of the Crawler and pass it the response cast to a string. Now we can start using jQuery-like selectors to extract the required data from the HTML.

Title

The title can be taken from the h1 tag:

The filter() method finds an element in the DOM, and then we extract the text from that element. The same line in jQuery looks very similar:

Genres And Description

Genres are extracted as the text content of the corresponding links.

The extract() method extracts attribute and/or node values from a list of nodes. In the ->extract(['_text']) call, the special attribute _text represents a node’s value. The description is also taken as the text value of the appropriate tag.
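For comparison, here is the same kind of extraction in Python with BeautifulSoup’s CSS-selector methods: select_one() is roughly like filter() followed by text() on the first match, and a list comprehension over select() is roughly like extract(['_text']). The markup and class names are hypothetical and simplified; IMDB’s real markup differs and changes over time.

```python
from bs4 import BeautifulSoup

# Hypothetical, simplified movie-page markup (not IMDB's real HTML).
html = """
<div class="title_wrapper">
  <h1>Example Movie</h1>
  <div class="subtext">
    <a href="/genre/action">Action</a>, <a href="/genre/sci-fi">Sci-Fi</a>
  </div>
</div>
<div class="summary_text">  A short plot summary.  </div>
"""

soup = BeautifulSoup(html, "html.parser")

# First matching element, then its text content.
title = soup.select_one("h1").get_text(strip=True)

# Text content of every matching link.
genres = [a.get_text(strip=True) for a in soup.select(".subtext a")]

# Text value of the description tag, with surrounding whitespace removed.
description = soup.select_one(".summary_text").get_text(strip=True)

print({"title": title, "genres": genres, "description": description})
```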

Release Date

Things become a little tricky with a release date:

As you can see, it is inside a <div> tag, but we cannot simply extract the text from it: that would give us Release Date: 16 February 2018 (USA) See more », which is not what we need. Before extracting the text from this DOM element, we need to remove all the tags inside it:

Here we select all the <div> tags from the Details section. Then we loop through them and remove all their child tags, which leaves each <div> free of inner tags. To get the release date, we select the fourth element (at index 3) and grab its text, which is now free of other tags.
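The same "strip the child tags, keep the text" approach can be sketched with BeautifulSoup, where decompose() removes a tag and everything inside it while the surrounding text nodes stay. The Details markup below is hypothetical, modelled on the text quoted above; only the release-date string comes from the article.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Hypothetical "Details" section modelled on the text quoted above.
html = """
<div id="titleDetails">
  <div class="txt-block"><h4>Country:</h4> USA</div>
  <div class="txt-block"><h4>Language:</h4> English</div>
  <div class="txt-block"><h4>Filming Locations:</h4> Atlanta</div>
  <div class="txt-block"><h4>Release Date:</h4> 16 February 2018 (USA)
    <span class="see-more"><a href="#">See more</a> &raquo;</span></div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
blocks = soup.select("#titleDetails .txt-block")

# Remove every child *tag* (the label and the "See more" span) but keep the
# plain text nodes; list() avoids mutating the children while iterating.
for block in blocks:
    for child in list(block.children):
        if isinstance(child, Tag):
            child.decompose()

release_date = blocks[3].get_text(strip=True)   # fourth block, index 3
print(release_date)
```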

The last step is to collect all this data into an array and resolve the promise with it:

Collect The Data And Continue Synchronously

Now it’s time to put all the pieces together. The request logic can be extracted into a function (or class), so that we can pass different URLs to it. Let’s extract a Scraper class:

It accepts an instance of the Browser as a constructor dependency. The public interface is very simple and consists of two methods: scrape(array $urls) and getMovieData(). The first one does the job: it runs the requests and traverses the DOM. The second one simply returns the results when the job is done.
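The same shape translates to Python and asyncio roughly as follows. This is an analogue, not the article’s PHP class: the injected fetch coroutine plays the Browser’s role, parse is a hypothetical callable that turns a response body into movie data, and the method names are adapted to Python style (get_movie_data instead of getMovieData). Results are appended in completion order, not in URL order.

```python
import asyncio

class Scraper:
    """asyncio analogue of the Scraper class: dependencies are injected."""

    def __init__(self, fetch, parse):
        self._fetch = fetch        # coroutine function: url -> response body
        self._parse = parse        # function: response body -> dict
        self._movie_data = []

    async def _scrape_one(self, url):
        html = await self._fetch(url)
        self._movie_data.append(self._parse(html))

    async def scrape(self, urls):
        # Start every request at once and wait until all have finished.
        await asyncio.gather(*(self._scrape_one(url) for url in urls))

    def get_movie_data(self):
        return self._movie_data

# Hypothetical fake fetch: the first URL is deliberately slower.
async def fake_fetch(url):
    await asyncio.sleep(0.05 if url.endswith("1") else 0.01)
    return f"<h1>{url}</h1>"

def parse_title(html):
    return {"title": html[len("<h1>"):-len("</h1>")]}

scraper = Scraper(fake_fetch, parse_title)
asyncio.run(scraper.scrape(["/movie/1", "/movie/2"]))
print(scraper.get_movie_data())
```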

Now, we can try it in action. Let’s try to asynchronously scrape two movies:

In the snippet above, we create a scraper and provide an array of two URLs to scrape. Then we run the event loop. It keeps running as long as it has something to do (until our requests are done and we have scraped everything we need). As a result, instead of waiting for the total time of all requests, we wait only for the slowest one. The output will be the following:

Web Scraping Ideas

You can continue with these results as you like: store them in different files or save them into a database. The main idea of this tutorial was to show how to make asynchronous requests and parse the responses.

Adding Timeout

Our scraper can also be improved by adding a timeout. What if the slowest request becomes too slow? Instead of waiting for it, we can set a timeout and cancel all slow requests. To implement request cancellation, we will use event loop timers. The idea is the following:

- Get the request promise.

- Create a timer.

- When the timer fires, cancel the promise.

Now we need an instance of the event loop inside our Scraper. Let’s provide it via the constructor:

Then we can improve the scrape() method and add an optional $timeout parameter:

If no $timeout is provided, we use a default of 5 seconds. When the timer fires, it tries to cancel the given promise, so with the default value every request that lasts longer than 5 seconds is cancelled. If the promise is already settled (the request is done), calling cancel() has no effect.
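In Python’s asyncio the same "timer plus cancellation" idea is packaged as asyncio.wait_for(), which cancels the awaited task when the timeout expires and raises a timeout error; if the task finishes in time, its result is returned unchanged. This is a swapped-in technique for illustration, not the ReactPHP timer code, and the fake_request coroutine and the None-on-timeout convention are assumptions of this sketch.

```python
import asyncio

# Hypothetical request: sleeps for `delay` seconds to simulate latency.
async def fake_request(url: str, delay: float) -> str:
    await asyncio.sleep(delay)
    return f"response from {url}"

async def with_timeout(coro, timeout: float = 5.0):
    """Return the coroutine's result, or None if it exceeds `timeout` seconds.

    wait_for() cancels the underlying task when the timeout expires,
    similar to the timer calling cancel() on a pending promise.
    """
    try:
        return await asyncio.wait_for(coro, timeout)
    except asyncio.TimeoutError:
        return None

async def main():
    # Both requests run concurrently; only the slow one hits the timeout.
    return await asyncio.gather(
        with_timeout(fake_request("/fast", 0.01), timeout=0.2),
        with_timeout(fake_request("/slow", 1.0), timeout=0.2),
    )

results = asyncio.run(main())
print(results)
```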

For example, if we don’t want to wait longer than 3 seconds, the client code is the following:

A note on web scraping: some sites don’t like being scraped. Scraping data for personal use is generally OK, but you should always scrape nicely. Avoid making hundreds of concurrent requests from one IP: the site may not like it and may block your scraper. To avoid this and improve your scraper, read the next article about throttling requests.

You can find examples from this article on GitHub.

This article is a part of the ReactPHP Series.
